Modeling Expected Goals

In Part I, we took a deep dive into the data and trends of shots based on three key variables; the distance, angle and a categorical variable to identify headed shots. We developed an understanding of the distributions and probabilities associated with shots and goals by representing, transforming and visualizing the data. Here, we use this data to develop a model to predict goals.

Classification

Since our response variable (shot result) is categorical, we must apply classification methods to create a predictive model. To introduce this type of approach, let’s look at an illustrative example. Assume we plot a random selection of shots from our data and classify them based on their result, only taking the distance into account.

Figure 1

Above we can see that there is a clear distinction between the blue and orange clusters. If we assume this is the true nature of the relationship between shots and distance, and we want to predict future shot results based on this data, what model could we adopt?

Since the data is easily separable, we can draw a boundary line between the two clusters. This line will represent a discriminant function, which we will use to classify each shot outcome as a goal or miss. In the case above, the discriminant function maps out a distance r from the center of the goal.

This discriminant function, drawn in green, is defined by the equation 𝑦=αx+β, where x is the distance from goal and y is the binary response. Specifically for the data above, y = x -12. We can translate this into a classification model by applying the so-called Heaviside function to our discriminant, which will return a value of 0 or 1 as the response variable is based on the value of y:

The Heaviside function is a hard classifier and the simplest classification model, but it requires the data to be cleanly separable.

Figure 2

Figure 3

In reality, the data describing shots is not cleanly separable, making goals difficult to predict with any kind of certainty. Nevertheless, the Heaviside function serves as a natural progression into more advanced classification models.

If we take a real sample of the data, it might resemble the plot below.

Figure 4

In the event where the data is not cleanly separable, we must look to model the probability as opposed to assigning hard zero and ones. We have already seen in our data exploration that there are clear trends for the probability with respect to the distance and angle variables. What function should we adopt to model these trends? There are numerous functions that map out probabilities and fit non-separable data, but we use the logistic function (also known as the sigmoid function) due to its simplicity.

Similar to the heaviside function, the logistic function takes in our predictors (distance in this case), but instead outputs values between zero and one.

Figure 5: The logistic function takes any value in the domain (−∞,+∞)and produces a value in the range (0,1). Thus, given a value 𝑦, we can interpret G(y) as a conditional probability that the shot results in a goal, 𝐺(𝑦)≡Pr[label is 1|𝑦].

The logistic function is an S-shaped curve that changes in slope and trajectory based on the values of the coefficients. Now the question is, how do we use the logistic function to model our shot data? Well, for each predictor variable we employ, we optimize the corresponding coefficient (α, β, etc.) to best fit the data. What do we optimize on? Something known as the log-likelihood. The process of maximizing the log-likelihood is beyond the scope here, but if you are interested in the process, here is a good jumping off point. For a slower-paced visual demonstration, StatsQuest does a good job.

The xG model

Our goal is to create a model to accurately describe our existing data as well as possible, and to eventually predict future events. Before applying logistic regression to our entire dataset, we must split our data into training and test sets. The training set serves at the data we build our model on, and the testing set is the data we use to evaluate how well our model performs. Fitting the training data to the logistic function will produce coefficients for our predictors ( I will do this by using the Scikit-learn library in Python). If we start by only fitting the distance variable, we should arrive at the optimal parameters to describe the training data:

Now if we map this function onto the sample data from figure 4:

Figure 6

While the graph above produces some certification that our coefficients produce a reasonable fit, it is not very clear graphically how good this fit is, especially considering this is just a small sample of our training data. Instead of measuring the goodness of fit numerically, let’s examine another graphic. As we constructed in Part I, we can bin the data by distance, calculate the ratio of shots that resulted in a goal per bin, and then scatter plot the bins onto a graph. If we now superimpose the logistic model onto the scatter plot, we can see how well the function maps the data.

Figure 7:

Note: the number of bins we choose will impact how the trajectory of the data looks on this graphic

Remember that we did not fit our model to the points in figure 6 and 7, but rather to the 32,000 shots in our training set. The purpose of these graphs is to gauge where our model performs well and where it does not. Plotting 32,000 points on a graph is not great for visualization, so we decide to plot a sample representation of the population.

We can see from figure 7 that the model predicts the data well for values greater than 6 meters, yet it undervalues the probability of goals from closer distances. This is the sort of advantage a graphical approach will offer. We can try to better predict shots closer to goal by adding a quadratic term to the logistic function. That is,

Figure 8

Now that is an improvement! If we add a quadratic term for the distance variable, we do a much better job at predicting shots close to goal. A numerical evaluation does not offer us this luxury. This sort of analysis is more of an art and less of a science, so it is important to play around with different options. Of course this is merely an ad hoc method of evaluating our model, for the purpose of graphically representing how the model compares to the data. We will explore a more concrete method of assessing the accuracy of the model soon, but let’s first add the angle predictor to the mix. We fit these two variables to the data and graph the probability via a contour map onto a pitch:

Figure 9

This represents the simplest expected goals model. If we flip back to part one and compare the two dimensional density plot with the xG model, we see that our two parameter model matches the probability distribution to a reasonable extent. Now for a closer look at the contour plot:

Figure 10

This is where we need to invoke some footballing knowledge. While the probability of values in central positions resembles the density plot from Part I, they are far too large for small angle positions near the goal line. Is it at all plausible to score from 5 meters out and effectively zero angle with the goal? Never mind having a 15% chance! This is a clear flaw with this simplified model. This does however represent a chance to experiment with different possibilities and variables. We can choose to add polynomial terms and interaction terms (such as δ*distance*angle) or add another variable all together. I tried a number of options and found that adding a “distance to center” of the pitch variable to produce the most reasonable contour plot:

Figure 11

Adding this new variable decreases the chance of scoring from small angles close to goal, but does little for further distances. This is probably because there are few shots taken from those positions and of the shots recorded, some might be miss-hit crosses that resulted in unlikely goals. Players rarely take shots from these wide, low angle positions. If we had 10 seasons worth of data, we would see the model begin to undervalue those types of shots. We could also choose to add artificial data in the form of misses on the goal line to fix this flaw, but for now we choose to live with it. Remember that while I have tried to add polynomial factors and this new variable to fix this flaw, the optimization in logistic regression will still fit the training data best it can. That is, as much as I want to tell the model to undervalue these types of shots, logistic regression only knows how to maximize the likelihood. I can merely add more flexibility to the model but I cannot tell it how and where to curve.

While we have visualized these models, we still do not have anything concrete on how well they perform at predicting future data. If we also want to add more variables, such as a categorical header variable, the visualization is going to become more cumbersome and difficult. For that we are going to need to employ some statistical analysis.

Model Evaluation

If we want to assess the accuracy of our model, we have to test how well it can predict future events. But this raises another concern. How do we classify a shot with our new expected goals model? Unlike the Heaviside function, which gives hard classifications, the logistic regression model returns a probability of a shot resulting in a goal.

In order to make a classification, we have to define a threshold. This threshold essentially splits the logistic function, assigning goals for where the model lies above the threshold and misses below. For example:

Figure 12

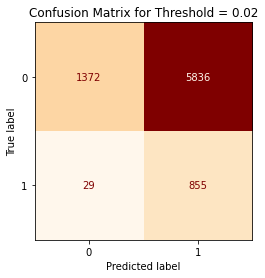

With a threshold of 0.3, the logistic model produces four possible classifications, which can be summarized in a confusion matrix.

Figure 13

From the confusion matrix, we can gather for which classifications the model gives promising predictions and for which it does not. For the example above, the model does a fantastic job of predicting misses but a poor job of predicting goals, and this is understandable if we examine where the threshold intersects the model. We can define the model’s ability to correctly predict goals with a metric known as sensitivity:

The model’s ability to correctly predict a miss is given by the specificity.

For the threshold value that we examined above, the model would produce the following confusion matrix on the entire testing data:

Sensitivity =19.6% Specificity= 97.4%

So what if we choose a much lower threshold value?

Figure 14

Sensitivity =96.7% Specificity= 19.0%

Now we have the opposite effect. The logistic regression model produces a better classification of goals but misclassifies misses at a much high volume. If we split the difference and choose a threshold in between the previous two:

Figure 15

Sensitivity =77.8% Specificity= 61.0%

We now predict goals and misses at a more balanced rate. We have seen that three different thresholds produce vastly different predictions from our model. Where they vary is in their specificity and sensitivity; that is, their ability to correctly predict goals and misses. It’s a tradeoff. So, what threshold do we choose? Well, neither of them and all of them.

Let’s take a step back. If we were using a logistic regression model to identify if a patient has cancer, then we would adopt a high threshold, to forgo a high specificity rate in favor of high sensitivity. We would much rather give out false positive results rather than false negatives. We do not want someone to walk away thinking they passed a cancer screening when in fact they have cancer. When we are trying to model goal probability, we do not have such a preference. In fact, trying to predict goals outright is not all that useful to begin with. As we will see in the next part, the power of the expected goal model does not lie in making a prediction for a single shot. So what was the purpose of investigating thresholds and confusion matrices? We can use them to compare different models using something known as a Receiver Operator Characteristic (ROC) graph.

Figure 16

Using a small step size, the ROC curve plots the model’s ability to predict goals correctly versus its ability to incorrectly predict misses for different threshold values. As you move up the y-axis, the model better predicts goals, and as we move to the left along the x-axis, the model better predicts misses. It essentially maps the trade-off between predicting goals and predicting misses. The dashed line represents a model that has no predictive power and is essentially useless because for every correct classification, it also predicts an incorrect classification. Therefore, the further away our ROC curve is from the 45 degree line, the better overall job it does at classifying the test data. Another way of looking at it is that the larger the area under the curve, the better our model is in describing the test data. This is useful to us because we can use it to compare different models and see if there is any substantial advantage to adding more variables to our model.

Figure 17

Now we have something concrete when assessing our model. If we look closely, a model with distance and angle as input variables produces the same area under the curve (AUC) as the same model but with an added “distance to center” parameter. So, contrary to some of the assumptions we made earlier, the “distance to center”predictor does not add much to the performance of our model and we should exclude it. While the “distance to center” parameter showed a slight change to the model in areas close to the goal line, this change meant very little as so few shots are taken from those positions. The AUC therefore tells us that adding the center to distance variable is rather useless. Note that we could have just as easily used p-values to learn if the center to distance parameter is useful towards our model.

Another advantage of the ROC curve is if we chose to use other classification techniques, such as SVM, random forests, neural networks, etc, we could compare and contrast the performance of those model to the one we created here. I think this is overkill for the problem at hand, but is an avenue for further exploration. While these alternative models would produce similar results, logistic regression provides us with a fairly simple and digestible method to describe shot results, whereas these other methods require a deeper and more sophisticated understanding of machine learning techniques.

Before we close, I want to briefly touch on R-squared values. In linear regression, R-squared measures the percentage of the response variable variation that a linear model explains. An R-squared of zero means the model does not explain any of the variation in the response and an R-squared of one represents a model that perfectly describes the data. R-squared does not directly translate to classification and logistic regression, but there are a number of pseudo R-squared values that mimic the linear regression version. The most popular, and the one I have employed, is Mcfadden’s R-squared, which measures the log likelihood of a null model (essentially just an intercept value) versus our model. We can interpret it in a similar fashion to the analogous linear regression R-squared. Values approaching one represent a model that perfectly classifies the data (such as the example we used to introduce the Heaviside function). For our best model based on the AUC (using distance, angle and header variables), Mcfadden’s R-squared comes out to about 0.171. Now before you throw out the xG model, we must understand that there is no definite answer to what value represents a good model and what represents a bad model. Goals, as we have discussed earlier, are mostly random. We need to remember that every shot is unique, comprised of hundreds of different variables that we have tried to model using just three. For that we should not expect to predict goals with certainty or anywhere near it. Some of this is due to our inability to model all these variables. Consider that while distance and angle give us a good sense of the likelihood of shot resulting in a goal, we did not take into account where the goalkeeper is positioned, if the shot was taken with a weak foot or strong foot, shot height at point of contact, the game state, home field advantage, if there are many bodies between the goal and the shot, etc. These are just a handful of the quantifiable variables that have an influence. There are also variables that are not easily quantifiable: what is the state of the pitch (as in rugged or is it a carpet), is fatigue playing a role, morale and confidence, and other soft factors. Even after neglecting these other variables, there is also some level of intrinsic randomness to shots. We have tried to model a very complex situation with a simple 3 parameter model; we should not expect such a high R-squared value. Nonetheless, xG is far from useless and in fact has been revolutionary to the way we approach the game.

In the next and final part, we will explore applications of the expected goals model, its strengths, weaknesses, the dangers of extrapolation and hopefully prove that expected goals are worthwhile. Until then.

![Figure 5: The logistic function takes any value in the domain (−∞,+∞)and produces a value in the range (0,1). Thus, given a value 𝑦, we can interpret G(y) as a conditional probability that the shot results in a goal, 𝐺(𝑦)≡Pr[label is 1|𝑦].](https://images.squarespace-cdn.com/content/v1/5eb2d59a8746c9483ecc9fe8/1611453392945-BCXALAJKRUI0V867RWVH/Log_function.png)